Learn TensorFlow for Deep Learning (code-first course)

Like to code? Looking to learn deep learning? Like to learn hands-on? This course will teach you deep learning and help you learn TensorFlow through 60+ hours of code-along videos.

Short version

I teach a beginner-friendly, apprenticeship-style (code-along) TensorFlow for Deep Learning course, the follow-on to my beginner-friendly machine learning and data science course.

Going through it will help you learn TensorFlow (a machine learning framework), deep learning concepts (including neural networks) and how to pass the TensorFlow Developer Certification.

You can sign up on the Zero to Mastery Academy.

You might also find these resources helpful:

- 💻 All of the TensorFlow for Deep Learning course materials on GitHub

- 🎥 Watch the first 14 hours of the course on YouTube (notebooks 00, 01, 02)

- 📖 Read the beautiful online book version of the course

- 🤔 Got questions about the course? Check out the livestream Q&A for the course launch

Longer version

Look, you can learn all of this yourself. You know how to search, you know how to read.

The beauty of a course is its curation. It gathers many important concepts in one place and teaches them in a sequential fashion.

The TensorFlow for Deep Learning course is no different.

If you want to learn TensorFlow and fundamental deep learning concepts in a side-by-side way (as in, I code, you code), you're not going to find a better resource online.

Testimonials

Many people have gone through the course, gained TensorFlow skills and have passed the TensorFlow Developer Certificate Exam.

Getting TensorFlow Developer Certified (how I did it)

During the 2020 lockdowns, I got TensorFlow Developer Certified. I made a video about it, which people seemed to enjoy.

A lot of questions came in asking how I did it.

I answered with “I read the Hands-On Machine Learning book end-to-end and then went through the practice questions for the exam.”

As I said, you could take the same steps.

Or you could take the TensorFlow for Deep Learning course.

I designed it specifically to help those who like writing (Python) code to start writing deep learning code as soon as possible.

The course is a perfect entry point for someone who likes to learn hands-on as soon as possible and then refer to texts and books as references later on.

It's also the perfect entry point for someone whose primary goal is learning how to use TensorFlow for deep learning but who may want to take the TensorFlow Developer Certification exam later on.

Specifically, the Zero to Mastery TensorFlow for Deep Learning course will teach you:

- Fundamental concepts of deep learning (we teach concepts using code)

- How to write TensorFlow code to put those concepts into practice (lots of it)

- How to pass the TensorFlow Developer Certification (optional, but requires the first two)

Certifications are nice-to-have, not need-to-have

In a livestream Q&A about the course, I was asked whether the certification is necessary.

It’s not.

In the tech field, no certification is needed. If you have skills and demonstrate those skills (through a blog, projects of your own, a nice-looking GitHub), that is a certification in itself.

A certification is only one form of proof of skill.

Rather than an official certification, I’m a big fan of starting the job before you have it. For example, say there’s an ideal role you’d like at a company, such as machine learning engineer. Use your research skills to figure out what a machine learning engineer does day to day and then start doing those things.

Use courses and certifications as foundational knowledge then use your own projects to build specific knowledge (knowledge that can’t be taught).

“Do certificates guarantee a job?”

— Daniel Bourke (@mrdbourke) April 22, 2021

No

Nothing guarantees a job

Courses (all of them) are a compressed form of knowledge

Use courses/certificates to build foundations

Then build upon those foundations by tinkering on your own projects

(start the job before you have it)

How is the course taught?

It’s taught code-first, code-along, apprenticeship style. This means every new concept is introduced using code.

The primary focus is to help you learn TensorFlow and deep learning by writing code.

Who should take the course?

This is a beginner-level course.

If you’ve got 1+ years of experience with deep learning/TensorFlow/PyTorch (PyTorch is another deep learning framework like TensorFlow), you shouldn’t take it. Use your skills to make something instead.

This course is for coders with ~6 months of experience writing Python code who want to learn about deep learning and how to build neural networks for various problems using TensorFlow.

What are the prerequisites?

Even though it’s for beginners, there are some prerequisites I’d recommend.

- 6+ months writing Python code. Can you write a Python function that accepts and uses parameters? That’s good enough. If you don’t know what that means, spend another month or two writing Python code and then come back here.

- At least one beginner machine learning course. Are you familiar with the idea of training, validation and test sets? Do you know what supervised learning is? Have you used pandas, NumPy or Matplotlib before? If the answer to any of these is no, I’d go through at least one machine learning course that teaches them first and then come back.

- Comfortable using Google Colab/Jupyter Notebooks. This course uses Google Colab throughout. If you have never used Google Colab before, it works very similarly to Jupyter Notebooks with a few extra features. If you’re not familiar with Google Colab notebooks, I’d suggest going through the Introduction to Google Colab notebook.

- Plug: The Zero to Mastery beginner-friendly machine learning course (I also teach this) teaches all of the above (and this course is designed as a follow-on).

With this being said, you can watch the first 14 hours (see notebooks 00, 01, 02 below) of the course on YouTube. If you’re interested, I’d encourage you to try it out and see how you go.

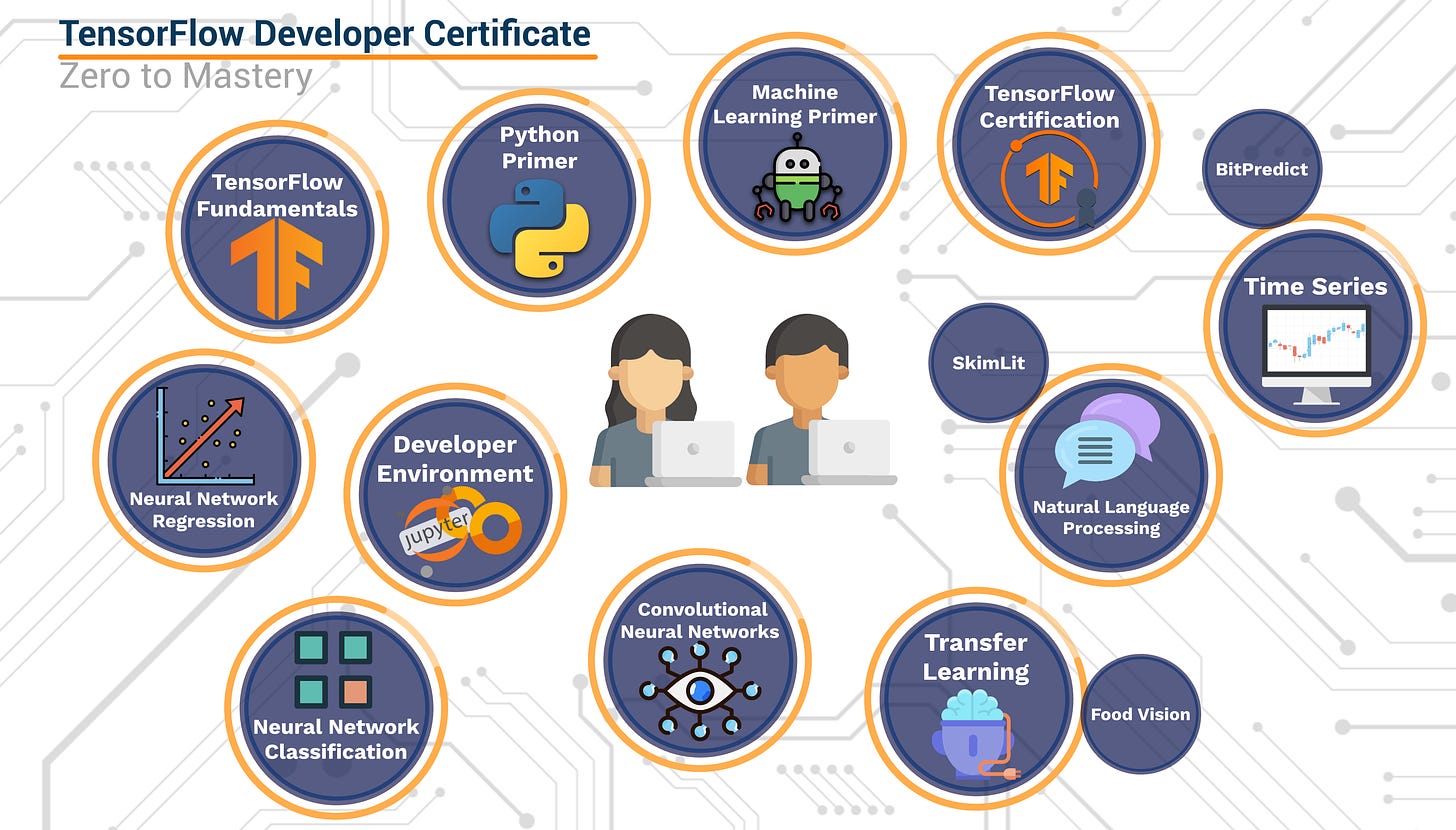

What’s in the course?

You can see all of the materials on the course GitHub (this is the source of truth for the course, all major announcements/updates/Q&A will go here as well).

The course is broken down into 11 notebooks (starting from 0), all of which cover something specific about TensorFlow or deep learning.

Notebook 0 — Learn TensorFlow Fundamentals

- Introduction to tensors (creating tensors with TensorFlow)

- Getting information from tensors (tensor attributes)

- Manipulating tensors (tensor operations)

- Tensors and NumPy

- Using @tf.function (a way to speed up your regular Python functions)

- Using GPUs with TensorFlow (a hardware accelerated way to speed up computation)

Key takeaways:

- Learn how to create and manipulate the fundamental building block of deep learning: the tensor

- Learn to represent data as numbers (so it can be used with a machine learning model)
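"Represent data as numbers" is the idea everything else builds on. Below is a minimal, framework-free sketch of it. It uses plain Python in place of TensorFlow, and the helper names and food labels are hypothetical. Categorical labels are mapped first to integer indices and then to one-hot vectors, which is a numeric form a model can consume:

```python
def build_vocab(labels):
    """Map each unique label to an integer index (sorted so the mapping is reproducible)."""
    return {label: i for i, label in enumerate(sorted(set(labels)))}

def one_hot(labels, vocab):
    """Turn each label into a list of 0s with a single 1 at that label's index."""
    vectors = []
    for label in labels:
        vec = [0] * len(vocab)
        vec[vocab[label]] = 1
        vectors.append(vec)
    return vectors

foods = ["pizza", "steak", "pizza", "sushi"]  # hypothetical labels
vocab = build_vocab(foods)
encoded = one_hot(foods, vocab)
print(vocab)    # {'pizza': 0, 'steak': 1, 'sushi': 2}
print(encoded)  # [[1, 0, 0], [0, 1, 0], [1, 0, 0], [0, 0, 1]]
```

For tensors, TensorFlow's `tf.one_hot(indices, depth)` does the same job once the labels are integer indices.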

Notebook 1 — Learn Neural Network Regression with TensorFlow

- Discussing the architecture of a neural network regression model (a model which predicts a number such as the price of a house)

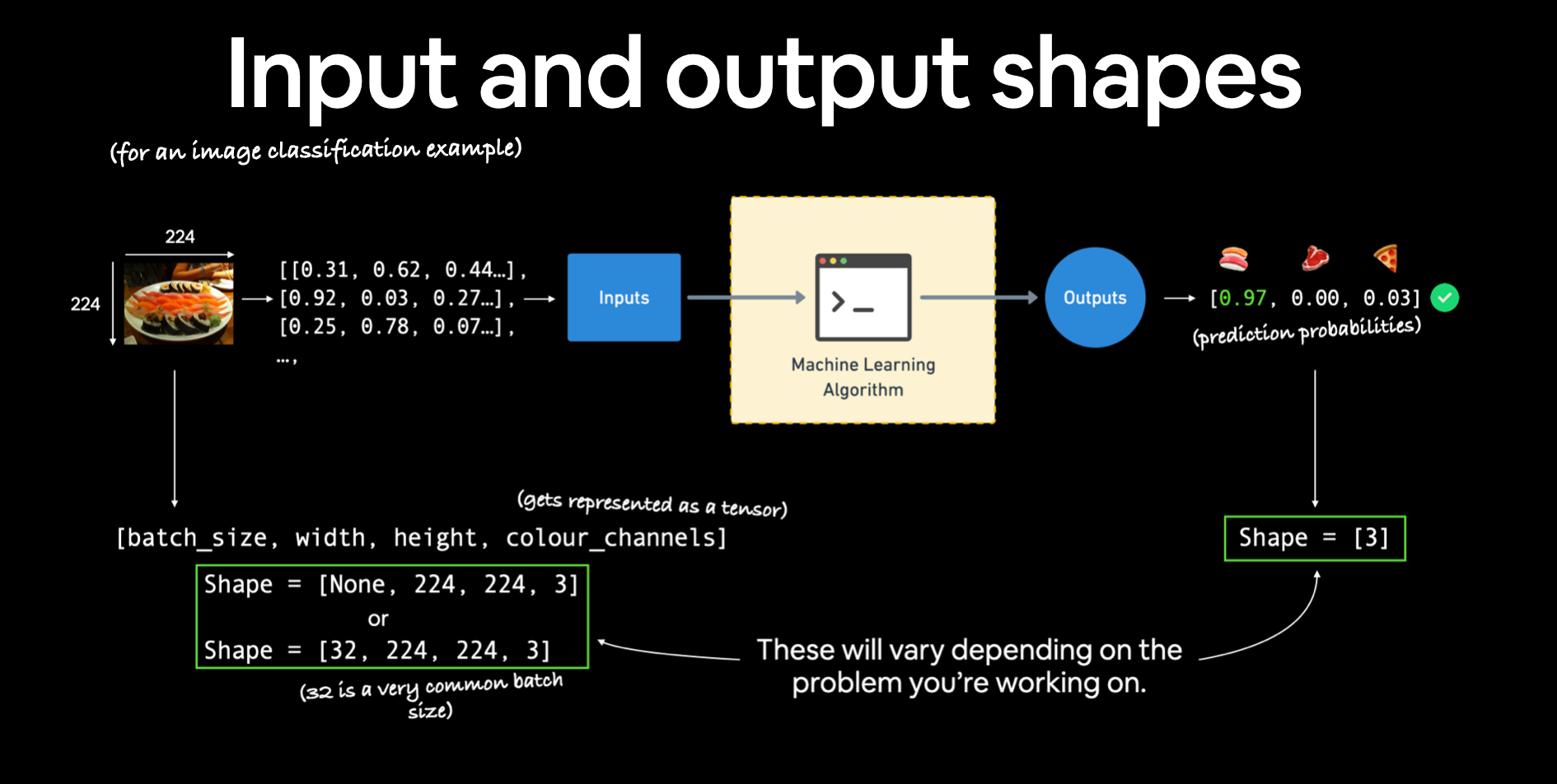

Input shapes and output shapes of a regression model:

- X: features/data (inputs)

- y: labels (outputs)

- Creating custom data to view and fit

Steps in modelling with TensorFlow:

- Creating a model

Compiling a model:

- Defining a loss function

- Setting up and choosing an optimizer

- Creating evaluation metrics

- Fitting a model (getting it to find patterns in our data)

Evaluating a regression model:

- Visualizing the model ("visualize, visualize, visualize")

- Looking at training curves

Compare predictions to ground truth (using regression evaluation metrics):

- MAE (mean absolute error)

- MSE (mean squared error)

- Saving a model (so we can use it later)

- Loading a model

Key takeaways:

- Build TensorFlow sequential models with multiple layers

- Prepare data for use with a machine learning model

- Learn the different components which make up a deep learning model (loss function, architecture, optimization function)

- Learn how to diagnose a regression problem (predicting a number) and build a neural network for it
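Notebook 1 evaluates regression models with MAE and MSE. To show what those two numbers measure, here is a plain-Python sketch on a handful of made-up labels and predictions. It is illustrative only and is not the course's code:

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between labels and predictions."""
    assert len(y_true) == len(y_pred), "inputs must be the same length"
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mean_squared_error(y_true, y_pred):
    """Average squared difference; large errors are punished more heavily than in MAE."""
    assert len(y_true) == len(y_pred), "inputs must be the same length"
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [10.0, 20.0, 30.0, 40.0]  # made-up house prices
y_pred = [12.0, 18.0, 30.0, 44.0]  # made-up model predictions
print(mean_absolute_error(y_true, y_pred))  # 2.0
print(mean_squared_error(y_true, y_pred))   # 6.0
```

The single error of 4 contributes 16 to the MSE sum but only 4 to the MAE sum, so MSE is the better choice when large misses matter most. Keras provides both as `tf.keras.losses.MeanAbsoluteError` and `tf.keras.losses.MeanSquaredError`.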

Notebook 2 — Learn Neural Network Classification with TensorFlow

- Breaking down the architecture of a neural network classification model

Input shapes and output shapes of a classification model:

- X: features/data (inputs)

- y: labels (outputs), answering "What class do the inputs belong to?"

- Creating custom data to view and fit

Steps in modelling for binary and multi-class classification:

- Creating a model

Compiling a model:

- Defining a loss function

- Choosing an optimizer

Setting up an optimizer (an algorithm to help your models learn):

- Finding the best learning rate (a value that decides how fast a model learns)

- Creating evaluation metrics

- Fitting a model (getting it to find patterns in our data)

- Improving a model

- The power of non-linearity

Understand different classification evaluation methods:

- Visualizing the model ("visualize, visualize, visualize")

- Looking at training curves

Compare predictions to ground truth (using classification evaluation metrics):

- Precision

- Accuracy

- Recall

- F1-score

Key takeaways:

- Learn how to diagnose a classification problem (predicting whether something is one thing or another)

- Build, compile & train machine learning classification models using TensorFlow

- Build and train models for binary and multi-class classification

- Plot modelling performance metrics against each other

- Match input (training data shape) and output shapes (prediction data target)
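The four classification metrics listed above all come from counting where predictions agree or disagree with the labels. This is a minimal sketch for the binary case, assuming labels are 0/1 with 1 as the positive class. The labels and predictions are made up:

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classification problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many were right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 0, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)  # accuracy 0.625, precision ~0.667, recall 0.5, f1 ~0.571
```

Accuracy alone (62.5%) hides the fact that the model found only half of the positives, which recall exposes. In practice, Keras offers `tf.keras.metrics.Precision` and `tf.keras.metrics.Recall`, and scikit-learn's `classification_report` prints all of these at once.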

Notebook 3 — Computer Vision and Convolutional Neural Networks with TensorFlow

- Getting an image dataset to work with (computer vision models need images of some kind)

- Architecture of a convolutional neural network (CNN)

- A quick end-to-end example (what we're working towards)

Learn steps in modelling for binary image classification with CNNs:

- Becoming one with the data (inspecting and understanding your data)

- Preparing data for modelling (using ImageDataGenerator)

- Creating a CNN model (starting with a baseline)

- Fitting a model (getting it to find patterns in our data)

- Evaluating a model

- Improving a model

- Making a prediction with a trained model

Learn steps in modelling for multi-class image classification with CNNs:

- Same as above (but this time with a different dataset)

Key takeaways:

- Build convolutional neural networks with Conv2D and pooling layers

- Learn how to diagnose different kinds of computer vision problems

- Learn how to build computer vision neural networks

- Learn how to use real-world images with your computer vision models
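To make Conv2D and pooling layers less of a black box, here is a plain-Python sketch of what they compute on a single-channel image. It assumes stride 1 with no padding for the convolution (Keras calls this `padding="valid"`) and a 2x2 max pool. Like Keras's Conv2D, it slides the kernel without flipping it. The image and the "edge detector" kernel are hypothetical:

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and sum element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            row.append(max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size)))
        pooled.append(row)
    return pooled

image = [[0, 0, 1, 1, 1] for _ in range(5)]  # 5x5 image: dark on the left, bright on the right
kernel = [[-1, 1], [-1, 1]]                  # hypothetical vertical-edge detector
feature_map = conv2d_valid(image, kernel)    # 4x4 output
pooled = max_pool2d(feature_map)             # 2x2 output
print(feature_map)  # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(pooled)       # [[2, 0], [2, 0]]
```

The feature map lights up only where dark meets bright, and pooling halves its size while keeping that signal. In a CNN, the kernel values are learned during training instead of being set by hand.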

Notebook 4 — Learn Transfer Learning with TensorFlow Part 1: Feature Extraction

- Introduce transfer learning (taking the patterns one model has learned on another problem and adjusting them to suit our own, a way to beat all of our old self-built models)

- Using a smaller dataset to experiment faster (10% of training samples of 10 classes of food)

- Build a transfer learning feature extraction model using TensorFlow Hub

- Introduce the TensorBoard callback to track model training results

- Compare model results using TensorBoard

Key takeaways:

- Learn how to use pre-trained models to extract features from your own data

- Learn how to use TensorFlow Hub for pre-trained models

- Learn how to use TensorBoard to compare the performance of several different models

Notebook 5 — Learn Transfer Learning with TensorFlow Part 2: Fine-tuning

- Introduce fine-tuning, a type of transfer learning that modifies a pre-trained model to better suit your data

- Using the Keras Functional API (a different way to build models in Keras)

- Using a smaller dataset to experiment faster (10% of training samples of 10 classes of food)

- Data augmentation (how to make your training dataset more diverse without adding more data)

Learn all of the above through running a series of modelling experiments on our Food Vision data:

- Model 0: a transfer learning model using the Keras Functional API

- Model 1: a feature extraction transfer learning model on 1% of the data with data augmentation

- Model 2: a feature extraction transfer learning model on 10% of the data with data augmentation

- Model 3: a fine-tuned transfer learning model on 10% of the data

- Model 4: a fine-tuned transfer learning model on 100% of the data

- Introduce the ModelCheckpoint callback to save intermediate training results

- Compare model experiment results using TensorBoard

Key takeaways:

- Learn how to set up and run several machine learning experiments

- Learn how to use data augmentation to increase the diversity of your training data

- Learn how to fine-tune a pre-trained model to your own custom problem

- Learn how to use Callbacks to add functionality to your model during training
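Data augmentation makes the training set more diverse by applying random, label-preserving changes to existing images. This is a minimal plain-Python sketch of two common transformations, a random horizontal flip and a random crop, on an image stored as a list of rows. The function names and the seeded `random.Random` generator are illustrative choices, not the course's code:

```python
import random

def random_flip_horizontal(image, rng, p=0.5):
    """Mirror the image left-to-right with probability p; otherwise return an unchanged copy."""
    if rng.random() < p:
        return [row[::-1] for row in image]
    return [row[:] for row in image]

def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w patch from a random position inside the image."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
rng = random.Random(42)
augmented = random_crop(random_flip_horizontal(image, rng), 2, 2, rng)
print(augmented)  # a 2x2 patch whose contents depend on the random draws
```

Each training epoch sees a slightly different version of the same picture, which is the point of augmentation. In recent TensorFlow versions, Keras preprocessing layers such as `tf.keras.layers.RandomFlip` and `tf.keras.layers.RandomCrop` do this inside the model.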

Notebook 6 — Learn Transfer Learning with TensorFlow Part 3: Scaling Up (Food Vision mini)

- Downloading and preparing 10% of the Food101 training data

- Training a feature extraction transfer learning model on 10% of the Food101 training data

- Fine-tuning our feature extraction model

- Saving and loading a trained model

- Evaluating the performance of our Food Vision mini model trained on 10% of the training data

Making predictions with our Food Vision mini model on custom images of food:

- Finding the most wrong predictions (a way to use a trained model to figure out where it's performing well and not so well)

Key takeaways:

- Learn how to scale up an existing model

- Learn how to evaluate your machine learning models by finding the most wrong predictions

- Beat the original Food101 paper using only 10% of the data
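Finding the "most wrong" predictions means looking for samples the model got wrong while being very confident. This is a minimal sketch, assuming you already have each sample's true label, predicted label and prediction probability. The file names and numbers below are made up:

```python
def most_wrong(samples, top_k=3):
    """Return incorrect predictions sorted by prediction probability, most confident first."""
    wrong = [s for s in samples if s["y_true"] != s["y_pred"]]
    return sorted(wrong, key=lambda s: s["pred_prob"], reverse=True)[:top_k]

preds = [  # hypothetical predictions on a few images
    {"path": "img_0.jpg", "y_true": "pizza", "y_pred": "pizza", "pred_prob": 0.97},
    {"path": "img_1.jpg", "y_true": "steak", "y_pred": "pizza", "pred_prob": 0.91},
    {"path": "img_2.jpg", "y_true": "sushi", "y_pred": "ramen", "pred_prob": 0.55},
    {"path": "img_3.jpg", "y_true": "ramen", "y_pred": "sushi", "pred_prob": 0.78},
]
for s in most_wrong(preds, top_k=2):
    print(s["path"], s["y_true"], "->", s["y_pred"], s["pred_prob"])
# img_1.jpg steak -> pizza 0.91
# img_3.jpg ramen -> sushi 0.78
```

Inspecting these samples often reveals mislabelled data or confusable classes rather than a pure model failure.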

Notebook 7 — Milestone Project 1: Food Vision 🍔👁

Combine everything you've learned in the previous 6 notebooks to build Food Vision: a computer vision model able to classify images of 101 different kinds of foods

- Our model well and truly beats the original Food101 paper

Notebook 8 — Learning NLP Fundamentals in TensorFlow

- Learn to diagnose a natural language processing problem

- Downloading a text dataset

- Visualizing text data

- Converting text into numbers using tokenization

- Turning our tokenized text into an embedding

Key takeaways:

Learn to:

- Preprocess natural language text to be used with a neural network

- Create word embeddings (numerical representations of text) with TensorFlow

Build neural networks capable of binary and multi-class classification using:

- RNNs (recurrent neural networks)

- LSTMs (long short-term memory cells)

- GRUs (gated recurrent units)

- CNNs

- Learn how to evaluate your TensorFlow NLP models

- Find the most wrong predictions of a model
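Tokenization and embeddings turn text into numbers in two steps. First, words are mapped to integer ids. Then each id is used to look up a vector of numbers. This plain-Python sketch follows a common convention of reserving id 0 for padding and 1 for unknown words. The helper names, sentences and vector size are hypothetical:

```python
import random
from collections import Counter

def build_vocab(texts, max_tokens=None):
    """Map words to ids: 0 = padding, 1 = unknown, then words from most to least frequent."""
    counts = Counter(word for text in texts for word in text.lower().split())
    # Most frequent first; ties broken alphabetically so the result is deterministic.
    words = sorted(counts, key=lambda w: (-counts[w], w))
    if max_tokens is not None:
        words = words[: max_tokens - 2]  # leave room for the two reserved ids
    vocab = {"": 0, "[UNK]": 1}
    for index, word in enumerate(words, start=2):
        vocab[word] = index
    return vocab

def vectorize(text, vocab, seq_len):
    """Turn text into a fixed-length list of ids, truncating or zero-padding as needed."""
    ids = [vocab.get(word, 1) for word in text.lower().split()][:seq_len]
    return ids + [0] * (seq_len - len(ids))

def make_embedding(vocab_size, dim, seed=42):
    """A random lookup table with one dim-length vector per id (learned during training in practice)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.05, 0.05) for _ in range(dim)] for _ in range(vocab_size)]

texts = ["the fire is spreading fast", "the sunset is beautiful"]
vocab = build_vocab(texts)
ids = vectorize("The fire is out", vocab, seq_len=6)
print(ids)  # [3, 6, 2, 1, 0, 0]  ("out" is unknown, so it maps to 1)
embedding = make_embedding(len(vocab), dim=4)
embedded = [embedding[i] for i in ids]  # 6 tokens, each now a list of 4 numbers
```

TensorFlow provides `tf.keras.layers.TextVectorization` and `tf.keras.layers.Embedding` layers for these two steps. The embedding vectors are trained along with the rest of the network rather than staying random.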

Notebook 9 — Milestone Project 2: SkimLit 📄🔥

- Replicate the model which powers the PubMed 200k paper to classify different sequences in PubMed medical abstracts (which can help researchers read through medical abstracts faster)

Key takeaways:

- Writing a preprocessing function to prepare our data for modelling

- Setting up a series of modelling experiments

- Building our first multimodal model (taking multiple types of data inputs)

- Replicating the model architecture from a machine learning research paper

- Find the most wrong predictions

- Making predictions on PubMed abstracts from the wild
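A preprocessing function for SkimLit has to turn raw abstracts into one sample per sentence. This sketch assumes a file format in which each abstract starts with a `###<id>` line, each sentence line is `LABEL<tab>sentence`, and abstracts are separated by blank lines. It also records each sentence's position in its abstract, the kind of extra input a multimodal model can use. The function and field names are illustrative:

```python
def preprocess_abstracts(lines):
    """Turn raw abstract lines into one dict per sentence, with positional features."""
    samples, current = [], []

    def flush():
        # Emit every buffered sentence of the current abstract, then reset the buffer.
        for number, raw in enumerate(current):
            target, text = raw.split("\t", 1)
            samples.append({
                "target": target,
                "text": text.lower(),
                "line_number": number,
                "total_lines": len(current),
            })
        current.clear()

    for line in lines:
        line = line.rstrip("\n")
        if line.startswith("###") or not line.strip():
            flush()  # an abstract id line or a blank line marks an abstract boundary
        else:
            current.append(line)
    flush()  # handle a file that doesn't end with a blank line
    return samples

raw_lines = [  # a made-up abstract in the assumed format
    "###123\n",
    "OBJECTIVE\tTo test a new drug.\n",
    "METHODS\tPatients were randomised.\n",
    "RESULTS\tThe drug helped.\n",
    "\n",
]
samples = preprocess_abstracts(raw_lines)
print(samples[1])
# {'target': 'METHODS', 'text': 'patients were randomised.', 'line_number': 1, 'total_lines': 3}
```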

Notebook 10 — Learn Time Series fundamentals in TensorFlow & Milestone Project 3: BitPredict 💰📈

- Learn how to diagnose a time series problem (building a model to make predictions based on data across time, e.g. predicting the stock price of AAPL tomorrow)

- Prepare data for time series neural networks (features and labels)

- Understanding and using different time series evaluation methods

- Build time series forecasting models with TensorFlow

Key takeaways:

- Setting up a series of deep learning modelling experiments

- Ensembling (combining multiple models together)

- Multivariate models (models with multiple data inputs)

- Replicating the N-BEATS algorithm using TensorFlow layer subclassing

- Creating a modelling checkpoint to save the best performing model during training

- Making predictions (forecasts) with a time series model

- Creating prediction intervals for time series model forecasts

- Discussing two different types of uncertainty in machine learning (data uncertainty and model uncertainty)

- Demonstrating why forecasting in an open system is BS (the turkey problem)
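Preparing time series data for a neural network usually means slicing the series into windows (features) and horizons (labels). This plain-Python sketch does that and also computes the MAE of a naive forecast, which predicts that tomorrow equals today and gives a baseline any model should beat. The window size, horizon and prices are illustrative assumptions:

```python
def make_windows(series, window_size=7, horizon=1):
    """Pair each run of window_size past values (features) with the next horizon values (labels)."""
    windows, labels = [], []
    for start in range(len(series) - window_size - horizon + 1):
        end = start + window_size
        windows.append(series[start:end])
        labels.append(series[end:end + horizon])
    return windows, labels

def naive_forecast_mae(series):
    """MAE of a naive baseline that predicts each value will equal the previous one."""
    errors = [abs(curr - prev) for prev, curr in zip(series[:-1], series[1:])]
    return sum(errors) / len(errors)

prices = [100, 101, 103, 102, 105, 107, 106, 108]  # made-up daily prices
X, y = make_windows(prices, window_size=3, horizon=1)
print(X[0], y[0])  # [100, 101, 103] [102]
print(len(X))      # 5
print(naive_forecast_mae(prices))
```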

After the code: preparing for the TensorFlow Developer Certification

As I said before, the certification is optional. And after going through the above sections, you’ll well and truly have the skills to prepare and take on the TensorFlow Developer Certification yourself.

The Preparing for the TensorFlow Developer Certification section walks through the what, why and how of the TensorFlow Developer Certification exam.

Of course, there are no secrets from the actual exam itself (that would be cheating). Instead, this section gives tips on how to practice the skills you've learned before taking the exam.

Get ready to dream in tensors

If you've got a curiosity to learn, you're ready to go.

But beware, when you learn how to think about a machine learning problem (or deep learning problem) you'll start to see the world anew.

All of a sudden, you'll be imagining how you can use deep learning to solve problems you face in everyday life.

And because you've learned TensorFlow (after going through the course), you'll have the skills to start building solutions to those problems.

Don't blame me. You've been warned.

If you're ready to learn TensorFlow, harness the powers of deep learning, join a fun community full of people eager to support and challenge you and dream in tensors, I'll see you in the course.

PS: If you've got any further questions, leave a note on the GitHub discussions page (that way others can see it too) and I or someone from the course will get to it.