Apple's New M1 Chip is a Machine Learning Beast

Comparing a (nearly) top spec Intel-based 16-inch MacBook Pro to the new Apple silicon MacBook Air and 13-inch MacBook Pro.

I watched the keynote and saw the graphs, the battery life, the instant wake. And they got me. I started to think, how could one of these new M1-powered MacBooks make their way into my life?

Of course, I didn't need one, but I kept wondering: what story could I tell myself to justify purchasing another computer? Then I had it. My 16-inch MacBook Pro is too heavy to carry around all the time. Yeah, that'll do. This 2.0 kg aluminium powerhouse is too much to be gallivanting around with.

Wait... 2.0 kg, as in, 4.4 pounds?

That's it?

Yes.

Wow. It's not even that heavy.

C'mon now... let's not let the truth get in the way of a good story.

I had it. My reason for placing an order on a shiny new M1 MacBook (or two). My 16-inch MacBook is too heavy to lug around to cafes and write code, words, edit videos and check emails sporadically.

And Apple seems to think their new M1 chip is 11x, 15x, 12x, 3x faster on a bunch of different things. Thought-provoking numbers but I've never measured any of these in the past.

All I care about is: can I do what I need to do, fast.

The last word of the previous sentence is the most important. I've become conditioned. Speed is a part of me now. Ever since the transition from hard drives to solid state drives. And I'm not going back.

I bought the 16-inch in February 2020. I'd just completed a large project and was flush with cash, so I decided to future-proof my workstation. Since I edit videos daily and hate lag, I opted for the biggest dawg I could buy and basically maxed everything except for the storage (see the specs below).

Thankfully I've still got a friend at Apple who was able to apply their employee discount to the beast (shout out to Joey).

Anyway, we've discussed my primary criteria: speed. Let's consider the others:

- Speed. If it's not fast, get lost.

- Cost. A big factor but I didn't mind paying for the higher spec machine nor do I mind paying for a quality computer. It's my primary tool. I use it to make art, I use it to make money, I use it to learn, I use it to communicate to the world.

- Portability. I don't like sitting in an office all day. Can I take this thing to a cafe or library for a few hours without searching for a powerpoint? Consider portability a combination of battery life and weight.

Why test/compare them

Why not?

But really, I'm a nerd. And an Apple fan. Plus, I wanted to see how my big-dawg-almost-top-of-the-line 16-inch MacBook Pro fared against the new M1 chip-powered MacBooks.

Plus, I can't remember being this excited for a new computing device since the original iPhone.

Other reasons include: carrying around a lighter laptop and tax benefits (if I buy another machine before the end of the year, I can claim it on tax).

Mac specs

Whenever I buy a new machine, I usually upgrade the RAM and the storage at least a step or two from baseline.

512GB storage and 16GB RAM seems to be the minimum for me these days (seriously, who is running a 128GB MacBook effectively?).

So for the M1 MacBooks, I upgraded the RAM on both from 8GB to 16GB, and for the 13-inch Pro, I also upgraded the storage from 256GB to 512GB.

The 16-inch MacBook is my current machine, which I've never had a problem with until running the tests below.

| | MacBook Air (M1) | MacBook Pro 13-inch (M1) | MacBook Pro 16-inch (Intel) |
|---|---|---|---|
| CPU | 8-core M1 | 8-core M1 | 2.4GHz 8-core Intel Core i9 |
| GPU | 7-core M1 | 8-core M1 | AMD Radeon Pro 5500M with 8GB of GDDR6 memory |
| Neural engine | 16-core M1 | 16-core M1 | NA |
| Memory (RAM) | 16GB | 16GB | 64GB |
| Storage | 256GB | 512GB | 2TB |
| Weight | 1.29 kg (2.8 pounds) | 1.4 kg (3.1 pounds) | 2.0 kg (4.4 pounds) |
| Battery life (quoted) | ~15 hours | ~20 hours | ~11 hours |
| Software | macOS Big Sur 11.0.1 | macOS Big Sur 11.0.1 | macOS Big Sur 11.0.1 |
| Price (actual)* | $1,899 AUD ($1,430 USD) | $2,599 AUD ($1,950 USD) | $6,649 AUD ($5,000 USD) |
| Price (baseline)** | $1,899 AUD ($1,430 USD) | $2,299 AUD ($1,730 USD) | $6,049 AUD ($4,550 USD) |

- *Price (actual) is the actual price I paid for each model. Note for the MacBook Pro 16-inch, I actually paid ~$5,500AUD since I have a friend who works at Apple and applied his employee discount (thank you Joey).

- **Price (baseline) is the price you'd pay if you upgraded all processing components (e.g. 8GB -> 16GB RAM on the M1 models and 2.3GHz -> 2.4GHz on the Intel model) except storage (since storage is usually the most expensive upgrade).

The tests

Apple's graphs were impressive. And the GeekBench scores were even more impressive. But these are just numbers on a page to me. I wanted to see how these machines performed doing what I'd actually do day-to-day:

- Writing words. I assume they all perform well at this.

- Browsing the web. Same as above.

- Editing videos. One of the primary uses I was interested in and one of the main reasons I bought the 16-inch MacBook Pro with a dedicated GPU.

- Writing code. A text editor doesn't require much but Xcode is getting pretty hefty these days.

- Training machine learning models. I write a lot of machine learning code. I don't expect to be able to train state-of-the-art models on a laptop but at least being able to tweak things/experiment would be nice.

Reflecting on the above, I devised three tests:

- Video exporting with Final Cut Pro. I made a pretty hefty video earlier in the year (2020 Machine Learning Roadmap), it's 2 hours, 37 minutes+ long. So I figured it'll be cool to see how long each machine takes to export it.

- Machine learning model training with CreateML. Apple's black-box machine learning model creation app. I don't have any large Xcode files handy, so instead I decided to see how the CreateML app handles training machine learning models on the new silicon.

- Native TensorFlow code using tensorflow_macos. The test I was most excited for. Apple and TensorFlow published a blog post saying the new TensorFlow for macOS fork sped up model training dramatically on the new M1 chip. Are these claims true?

Why not test more extensively?

These are enough for me. I've got other sh*t to do.

Alright, time for the results. The best results for each experiment have been highlighted in bold.

Experiment 1: Final Cut Pro video export

For this one, all machines were given ample time to pre-render the raw footage. So when the export button got clicked, they all should've been relatively on the same page.

Experiment details:

- Video length: 2 hours, 37 minutes+

- Export file size: 26.6GB (quoted), ~6.5GB (actual)

| | MacBook Air (M1) | MacBook Pro 13-inch (M1) | MacBook Pro 16-inch (Intel) |
|---|---|---|---|
| Export time | 38 minutes, 10 seconds | 32 minutes, 38 seconds | **21 minutes, 23 seconds** |
| Starting battery life | 89% | 92% | 87% |
| Ending battery life | 78% | 83% | 51% |
| Battery life change | -11% | **-9%** | -36% |

No surprise here, the 16-inch MacBook Pro exported in the fastest time. Most likely because of the dedicated 8GB GPU or 64GB of RAM.

However, it seems using the dedicated GPU came at the cost of battery life and fan noise (in the video you can hear the fans going off like a jet).

During the video export, the M1-powered MacBook Air and MacBook Pro 13-inch remained completely silent (the MacBook Air had no choice, it doesn't have a fan but the MacBook Pro 13-inch's fan never turned on).
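To put the battery numbers in perspective, here's a rough back-of-the-envelope sketch in Python. It takes the export times and battery changes from the table above and works out the drain per minute and how many of these exports a full charge would cover. It assumes battery drain stays linear across a whole charge, which is a big assumption, and the names `EXPORTS` and `drain_stats` are just for illustration.

```python
# Export time (minutes, seconds) and battery used (percentage points),
# copied from the Final Cut Pro table above.
EXPORTS = {
    "MacBook Air (M1)": ((38, 10), 11),
    "MacBook Pro 13-inch (M1)": ((32, 38), 9),
    "MacBook Pro 16-inch (Intel)": ((21, 23), 36),
}


def drain_stats(minutes, seconds, battery_used):
    """Return (battery % used per minute, exports per full charge).

    Assumes battery drain is linear, which real batteries only approximate.
    """
    total_minutes = minutes + seconds / 60
    per_minute = battery_used / total_minutes
    exports_per_charge = 100 / battery_used
    return per_minute, exports_per_charge


for machine, ((m, s), used) in EXPORTS.items():
    per_minute, per_charge = drain_stats(m, s, used)
    print(f"{machine}: {per_minute:.2f}%/min, ~{per_charge:.1f} exports per charge")
```

On those assumptions, the Intel machine burns through battery roughly six times faster per minute of export than either M1 machine.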

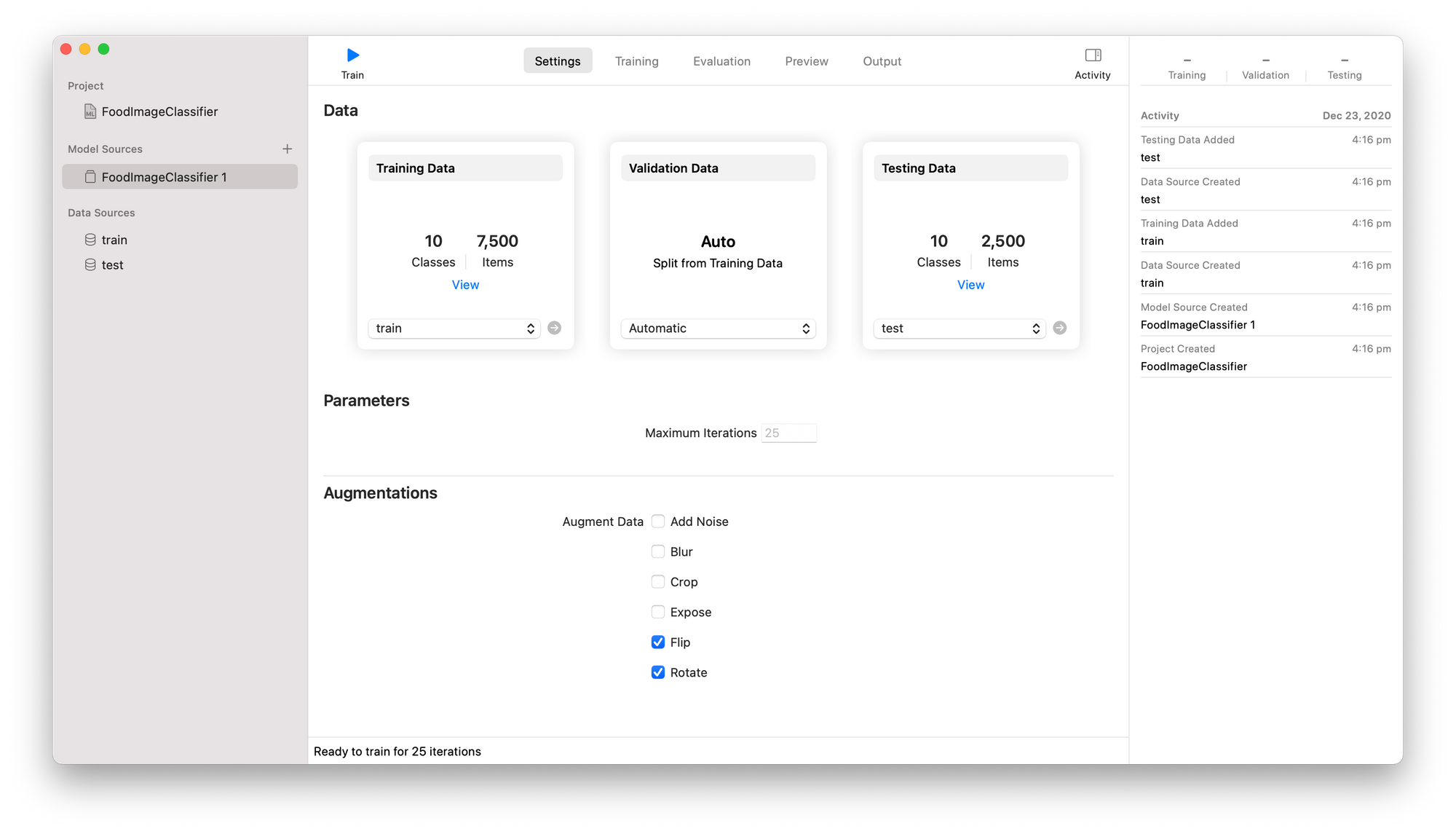

Experiment 2: CreateML machine learning model training

I've never actually used a machine learning model trained by CreateML. However, I decided to see how one of Apple's custom apps would leverage their new silicon.

For this test, each MacBook was set up with the following CreateML settings:

- Problem: Multi-class image classification.

- Model: CreateML image classification (Apple don't tell you what architecture they use, but I'd take a guess it's a ResNet).

- Data size: 7500 training images, 2500 testing images.

- Maximum iterations: 25.

- Data augmentation: Flip, rotate.

| | MacBook Air (M1) | MacBook Pro 13-inch (M1) | MacBook Pro 16-inch (Intel) |
|---|---|---|---|
| Model training time | **11 minutes, 30 seconds** | 15 minutes, 30 seconds | 43 minutes, 10 seconds+ (DNF) |
| Starting battery life | 72% | 75% | 31% |
| Ending battery life | 68% | 69% | 0%* |
| Battery life change | **-4%** | -7% | -31% |

- *The MacBook Pro 16-inch died before testing finished.

Potentially the most surprising result of all the tests is that the M1 MacBook Air won this one by a clear margin, both in training time and battery life. All the while without a fan and with one fewer GPU core than the MacBook Pro 13-inch (the MacBook Air I used has an 8-core CPU, 7-core GPU M1 chip).

It also looks like the CreateML app has been optimised for the M1 chip: despite having 8 CPU cores and a dedicated GPU, the 16-inch Intel-powered MacBook Pro ran out of juice before finishing the experiment.

Experiment 3: TensorFlow macOS code

During the M1 announcement keynote, Apple claimed their new silicon was capable of "running popular deep learning frameworks such as, TensorFlow" at much greater speeds than previous generations.

Hearing this forced me to sit up a little straighter.

Did they just say TensorFlow? Natively?

I read back through the announcement.

Yes. They did. They said TensorFlow.

Then I found the blog posts by the TensorFlow team and Apple Machine Learning team showcasing the new results on the M1 chips and Intel-based Macs. Turns out, Apple recently released a fork of TensorFlow, tensorflow_macos, which allows you to run native TensorFlow code right on your Mac (something which was previously a pain in the ass, actually, not really, I hear PlaidML has made it easier but I haven't tried that).

Naturally, after hearing this news, I had to try it.

By some miracle, I installed Apple's fork of TensorFlow into a Python 3.8 environment without 8-10 hours of troubleshooting and created the following mini-experiments:

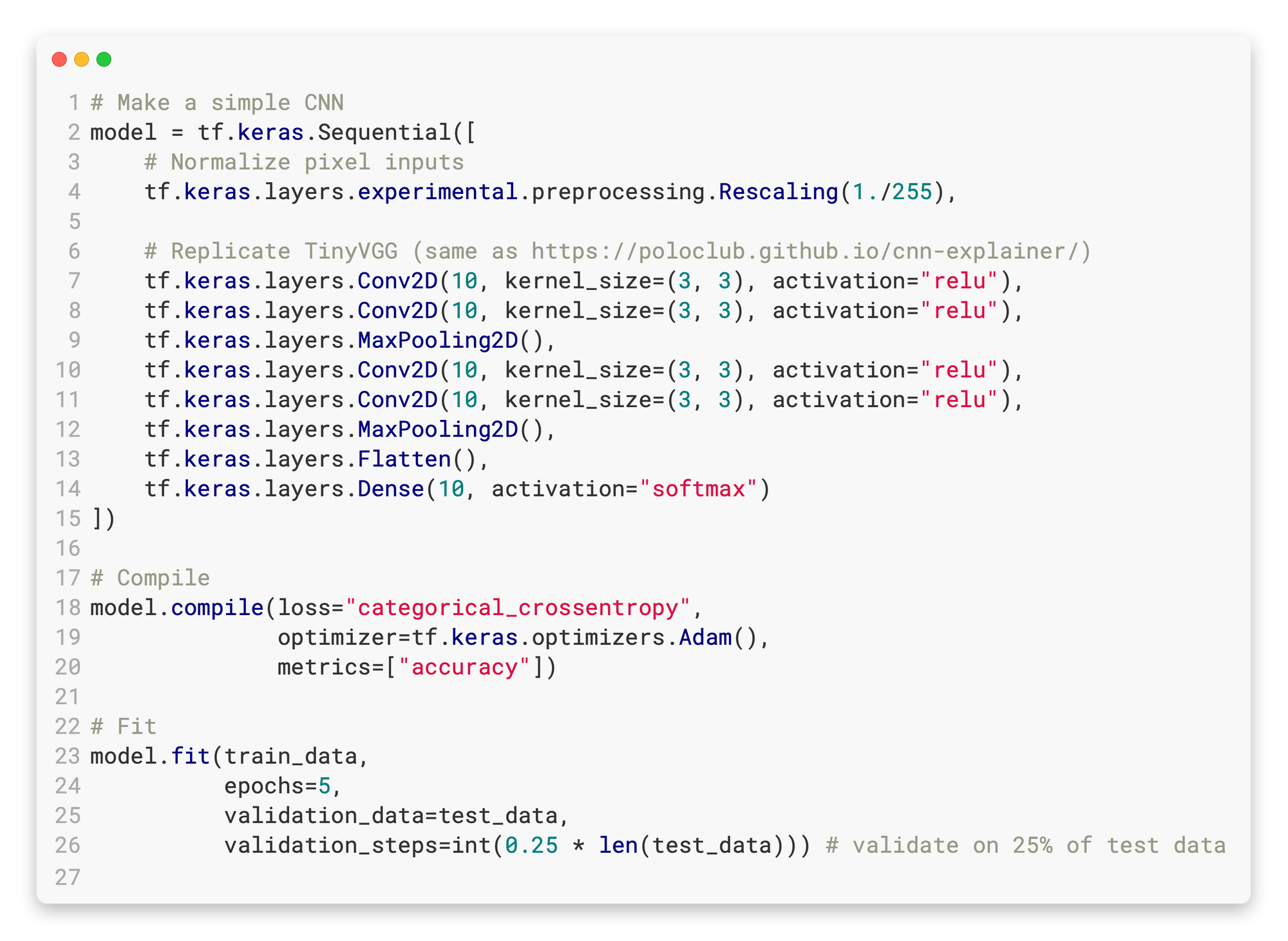

3.1 A basic convolutional neural network (CNN)

I copied the CNN architecture on the CNN explainer website (TinyVGG). And used similar data to the CreateML test.

- Problem: Multi-class image classification.

- Model: TinyVGG (see Google Colab notebook for exact code).

- Data: 750 training images, 2500 testing images.

- Number of classes: 10 (from Food101 dataset).

- Number of epochs: 5.

- Batch size: 32.

3.2 Transfer learning with EfficientNetB0

These days I rarely build models from scratch. I use either an existing untrained architecture and train it on my own data or a pretrained architecture like EfficientNet and fine-tune it on my own data.

- Problem: Multi-class image classification.

- Model: Headless EfficientNetB0 (I only trained the top layer, all others were frozen).

- Data: 750 training images, 625 testing images (2500 * 0.25 validation steps).

- Number of classes: 10 (from Food101 dataset).

- Number of epochs: 5.

- Batch size: 4 (the lower batch size was required due to the M1 not having the memory capacity to deal with a higher size, I tried 32, 16, 8 & they all failed).

3.3 tensorflow_macos GitHub benchmark

Browsing Apple's tensorflow_macos GitHub, I came across an issue thread containing a benchmark a fair few people had run on their various machines. So I decided to include it in my testing.

- Problem: Multi-class image classification.

- Model: LeNet.

- Data: 60,000 training images, 10,000 testing images (MNIST).

- Number of classes: 10.

- Number of epochs: 12.

- Batch size: 128.

- Source: https://github.com/apple/tensorflow_macos/issues/25
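For a sense of scale, the dataset and batch sizes listed for the three mini-experiments translate into quite different amounts of work per epoch. The sketch below is plain Python arithmetic, not the notebook's code, and it assumes the final partial batch is kept rather than dropped. The names `EXPERIMENTS` and `steps_per_epoch` are illustrative.

```python
import math

# (training images, batch size) for each mini-experiment described above.
EXPERIMENTS = {
    "3.1 Basic CNN (TinyVGG)": (750, 32),
    "3.2 Transfer learning (EfficientNetB0)": (750, 4),
    "3.3 tensorflow_macos benchmark (LeNet)": (60_000, 128),
}


def steps_per_epoch(num_images, batch_size):
    """Batches needed to see every image once, keeping the last partial batch."""
    return math.ceil(num_images / batch_size)


for name, (num_images, batch_size) in EXPERIMENTS.items():
    print(f"{name}: {steps_per_epoch(num_images, batch_size)} steps/epoch")
```

So the transfer learning run takes 188 small steps per epoch where the basic CNN takes 24, and the LeNet benchmark takes 469, which is worth keeping in mind when comparing per-epoch times across experiments.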

TensorFlow code results

As well as running the above three experiments on all of the MacBooks I had, I also ran them on a GPU-powered Google Colab instance as a control (my usual workflow is: experiment on Google Colab, scale up on larger cloud servers when needed).

| | MacBook Air (M1) | MacBook Pro 13-inch (M1) | MacBook Pro 16-inch (Intel) | Google Colab T4 GPU^ |
|---|---|---|---|---|
| Basic CNN* | 7-8s/epoch | 7-8s/epoch | 35-41s/epoch | **5s/epoch** |
| Transfer learning | 20-21s/epoch | 20-21s/epoch | 59-66s/epoch | **7s/epoch** |
| tensorflow_macos benchmark | 23-24s/epoch | 25-26s/epoch | 20-21s/epoch | **9s/epoch** |

Note: For all models, the first epoch is usually the longest due to data loading, so only the per-epoch times after the first epoch have been included.

- ^Google Colab GPU instance used pure TensorFlow rather than tensorflow_macos.
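If you want to report your own runs the same way, the "skip the first epoch" rule from the note above is easy to apply to a list of per-epoch durations. This is a minimal sketch, not the code used for the table. The function name `summarise_epoch_times` and the sample timings are made up for illustration.

```python
def summarise_epoch_times(epoch_seconds):
    """Format per-epoch durations as a range, ignoring the first (warm-up) epoch."""
    if len(epoch_seconds) < 2:
        raise ValueError("need at least two epochs to skip the first one")
    steady = epoch_seconds[1:]  # drop the first epoch (data loading overhead)
    fastest, slowest = round(min(steady)), round(max(steady))
    if fastest == slowest:
        return f"{fastest}s/epoch"
    return f"{fastest}-{slowest}s/epoch"


# Hypothetical timings for a 5-epoch run (not measured values).
print(summarise_epoch_times([30.0, 12.2, 12.8, 13.1, 12.6]))
```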

The Google Colab GPU-powered instance performed the fastest across all three tests.

Notably, the M1 machines significantly outperformed the Intel machine in the Basic CNN and Transfer learning experiments.
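To put rough numbers on "significantly", the sketch below compares the Intel 16-inch with the M1 Air using the per-epoch ranges from the table above. Taking the midpoint of each range is my simplification (the table only gives ranges, not means), and the names `SECONDS_PER_EPOCH`, `midpoint` and `intel_vs_air` are illustrative.

```python
# Seconds per epoch as (low, high) ranges, copied from the results table above.
SECONDS_PER_EPOCH = {
    "Basic CNN": {"air": (7, 8), "intel": (35, 41)},
    "Transfer learning": {"air": (20, 21), "intel": (59, 66)},
    "tensorflow_macos benchmark": {"air": (23, 24), "intel": (20, 21)},
}


def midpoint(low_high):
    """Middle of a (low, high) range; a simplification, not a measured mean."""
    low, high = low_high
    return (low + high) / 2


def intel_vs_air(experiment):
    """How many times longer an epoch takes on the Intel 16-inch than the M1 Air."""
    times = SECONDS_PER_EPOCH[experiment]
    return midpoint(times["intel"]) / midpoint(times["air"])


for experiment in SECONDS_PER_EPOCH:
    print(f"{experiment}: Intel takes {intel_vs_air(experiment):.2f}x as long as the M1 Air")
```

By this measure the M1 Air is roughly 5x faster than the Intel machine on the basic CNN and about 3x faster on transfer learning. On the tensorflow_macos benchmark the ratio drops below 1, meaning the Intel machine is slightly ahead.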

However, the Intel powered machine clawed back some ground on the tensorflow_macos benchmark. I believe this was due to explicitly telling TensorFlow to use the GPU, using the lines:

    from tensorflow.python.compiler.mlcompute import mlcompute
    mlcompute.set_mlc_device(device_name='gpu')

I tried running these lines with the previous two experiments and it didn't make a difference. Perhaps it has something to do with the different data loading schemes used in the Basic CNN/Transfer learning setup versus the tensorflow_macos benchmark.

See all code experiments in the accompanying Google Colab Notebook.

Portability

Odds are if you're buying a laptop, you want to be able to move it around. Perhaps write some words while looking at the beach or code up your latest experiment whilst sipping coffee at your local cafe.

So what we have here is a compilation of the various amounts of battery used over the three experiments, plus a new portability score I've invented.

| | MacBook Air (M1) | MacBook Pro 13-inch (M1) | MacBook Pro 16-inch (Intel) |
|---|---|---|---|
| Starting battery life | 89% | 92% | 87% |
| Ending battery life | 39% | 35% | 65%* |
| Battery life change | **-50%** | -57% | -122% |
| Portability score** | **64.5** | 79.8 | 244 |

Note: All experiments took place over two days (about 3-hours on the first day and 2-hours on the second day). And the M1 machines were never plugged into charge.

- *16-inch MacBook Pro was charged to full (from 0%) once.

- **Portability score = battery life lost (in percentage points) x weight (in kg). Lower is better.
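The portability score is just battery used multiplied by weight, so it's easy to reproduce. Here's a small Python sketch using the battery and weight figures from the tables in this article. The names `MACHINES` and `portability_score` are illustrative.

```python
# Total battery used across all experiments (percentage points) and weight (kg),
# copied from the tables in this article.
MACHINES = {
    "MacBook Air (M1)": (50, 1.29),
    "MacBook Pro 13-inch (M1)": (57, 1.4),
    "MacBook Pro 16-inch (Intel)": (122, 2.0),
}


def portability_score(battery_used, weight_kg):
    """Battery used multiplied by weight, rounded to 1 decimal; lower is better."""
    return round(battery_used * weight_kg, 1)


for machine, (battery_used, weight_kg) in MACHINES.items():
    print(f"{machine}: {portability_score(battery_used, weight_kg)}")
```

Because it multiplies two "lower is better" quantities, a machine that is both heavy and power-hungry gets penalised twice, which is why the 16-inch scores so much worse than either M1 machine.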

The M1 Macs crushed it here, each finishing all tests with over 30% of battery left. The MacBook Air was again the standout, using the least amount of power and getting the lowest (best) portability score.

For sale: 16-inch MacBook Pro

Well f*ck...

Anyone want to buy a second-hand close-to-top-spec MacBook Pro 16-inch? It's been well looked after, I promise.

After running the above experiments and using the M1 MacBooks for a couple of weeks, it looks like the graphs Apple showed were pretty close to reality. And all those raving reviews? Well, in my experience, they're correct too.

I originally bought the 16-inch MacBook Pro to be a powerhouse, a speed machine. And it is that. But so are the M1 machines. Except the M1 machines are lighter, quieter and have a better battery life.

The only test the MacBook Pro 16-inch won was the video rendering test (and of course screen size). And even then, the results weren't dramatically better, certainly not "this machine costs 2.5x more" better.

So what next?

I'm keeping the 13-inch MacBook Pro. The little extra boost for video editing won it over the 13-inch Air (plus, I edited the entire video version of this article on the 13-inch Pro without a single hiccup). I'll use it as a portable machine and probably set my 16-inch up as a permanent desktop.

But the Air... Oh the Air... I remember when I worked at Apple Retail, I'd tell customers to get the Air if they were only concerned with word processing and browsing the web. Now you can train machine learning models on it.

Which one should you get?

"I write code, words and browse the web." Or "I just want a portable, capable machine."

Get the MacBook Air, you won't be disappointed. It's what I'd get if I didn't edit videos daily.

"I write code, words, browse the web and edit videos."

From my tests, the 13-inch M1 MacBook Pro or M1 MacBook Air will perform at 70-90% of what a nearly-top-spec Intel 16-inch MacBook Pro can offer, so either of those would be phenomenal. For a slight edge on video editing you might opt for the 13-inch M1 MacBook Pro.

"I want a larger screen size and don't care about cost."

As of now, the only valid reason I'd consider buying an Intel-based 16-inch MacBook Pro would be if screen size was of utmost importance to you and you didn't have a budget.

Screen size doesn't matter so much to me as most of the time I'm running a single full-screen app or two apps split down the middle of the screen. Or if I do want to run multiple apps, I'll plug my machine into an external monitor (note: for now the M1 Macs only support a single external monitor, so for the 3+ monitor fans out there, you'll need an Intel-based Mac).

This being said, even if you were after a larger screen, I'd hold off and wait for the Apple silicon 16-inch.

Conclusion

As I said, I had no problems with my 16-inch MacBook Pro. But in comparison to these new M1 MacBooks, it feels dated.

I haven't been this impressed with a new computer since I first switched from hard drive to solid state drive.

I'm writing this on the 13-inch M1 MacBook Pro and it feels like butter. The little things, the instant wake, the new keyboard style, the native Apple apps, the battery life, they all add up.

If Apple were capable of pulling off these kinds of performance improvements with a 1st-generation chip in a laptop (even one without a fan), I can't imagine what's going to happen on the machines without power constraints (the Mac mini hints at the potential here).

How about a 16-inch with an M2?

Hopefully they wait at least another year, I mean, my Intel-based 16-inch isn't even a year old yet...

PS you can see the video version of this article, including all of the tests being run on YouTube: