The Hundred-Page Machine Learning Book Book Review

The start here and continue here of machine learning. 100-pages in 10-minutes. You ready?

The Hundred-Page Machine Learning Book is the book I wish I had when I started learning machine learning.

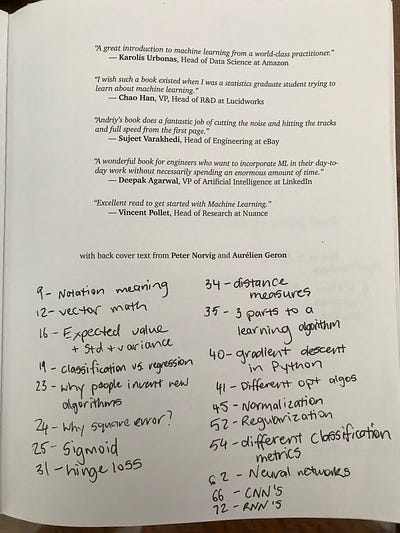

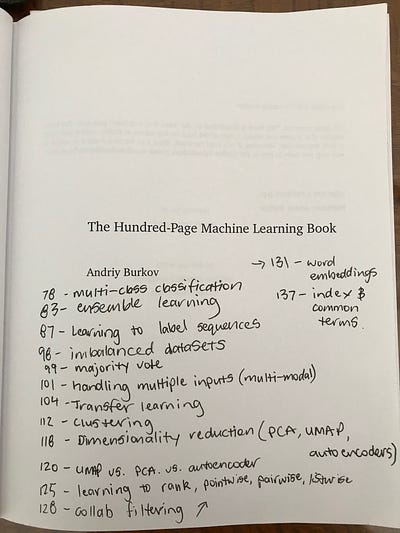

You could read it in a day. It took me longer than a day but I got through it. I took my time. Underlined what stood out, took notes in the front cover, the pages I want to revisit. Finishing it isn’t the point though. The Hundred-Page Machine Learning Book is a reference, something you can keep coming back to. Which is what I plan on doing.

Inline with the book and the author, Andriy Burkov’s style, we’ll keep this article short and to the point.

Should you buy the book?

Yes. But you don’t have to. You can read it first. But you should buy a copy, hold it, read it, sit it on your coffee table. Then when your friends ask, ‘What is machine learning?’, you’ll be able to tell them.

Who is the book for?

Maybe you’re studying data science. Or you’ve heard of machine learning being everywhere and you want to understand what it can do. Or you’re familiar with applying the tools of machine learning but you want to make sure you’re not missing any.

I’ve been studying and practising machine learning for the past two-years. I built my own AI Masters Degree, it led to being a machine learning engineer. This book is part of my curriculum now but if it was out when I started, it would’ve been on there from the beginning.

What previous knowledge do I need for the Hundred-Page Machine Learning book?

Having a little knowledge about math, probability and statistics would be helpful but The Hundred-Page Machine Learning Book has been written a way that you’ll get most of these as you go.

So the answer to this question remains open. I read it from the perspective of a machine learning engineer, I knew some things but learned many more.

If you don’t have a background in machine learning it doesn’t mean you should shy away from it.

I’m treating it as a start here and a continue here for machine learning. Read it once. And if it doesn't make sense, read it again.

Why should you read it?

You’ve seen the headlines, read the advertisements. Machine learning, artificial intelligence, data, they’re everywhere. By reading this article you’ve interacted with these tools dozens of times already. Machine learning is used to recommend content to you online, it helps maintain your phone's battery, it powers the booking system you used for your last flight.

What you don’t know can seem scary. The media does a great job of putting machine learning into one of those too hard buckets. But The Hundred-Page Machine Learning book changes that.

Now in the space of a day, or longer if you’re like me, you’ll be able to decipher what headlines you should pay attention to and the ones you shouldn’t. Or in machine learning terms, the global minimum from the local minimum — don’t worry the book covers this.

Will this book teach you everything about machine learning?

No.

What does it teach then?

What works.

That’s the easiest way of describing it. The field of machine learning is vast, hence traditional books being far more than 100-pages.

But The Hundred-Page Machine Learning Book covers what you should know.

The introduction goes through the different kinds of machine learning.

Supervised learning, the kind where you have data and labels of what that data is. For example, your data may be a series of articles and the labels may be the categories those articles belong to. This is the most common type of machine learning.

Unsupervised learning or when you have data but no labels. Think the same articles but now you don’t know what categories any of them belong to.

Semi-supervised learning is when some of your articles have labels but others don’t.

And reinforcement learning involves teaching an agent (another word for computer program) to navigate a space based on rules and feedback defined by that space. A computer program (agent) which moves chess pieces (navigate) around a chessboard (space) and is rewarded for winning (feedback) is a good example.

Chapter 2 — Making math great again (it always was)

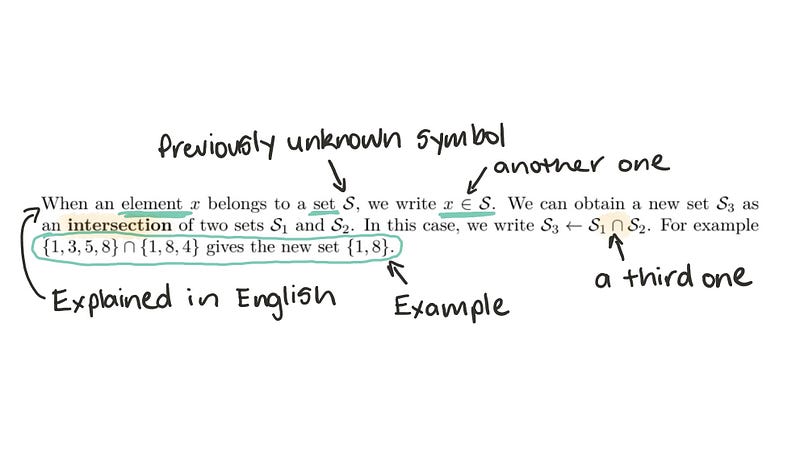

Chapter 2, dives into the Greek symbols you haven’t seen since high school. The ones which come along with any machine learning resource. Once you know what they mean, reading a machine learning paper won’t be as scary.

You’ll find examples like this.

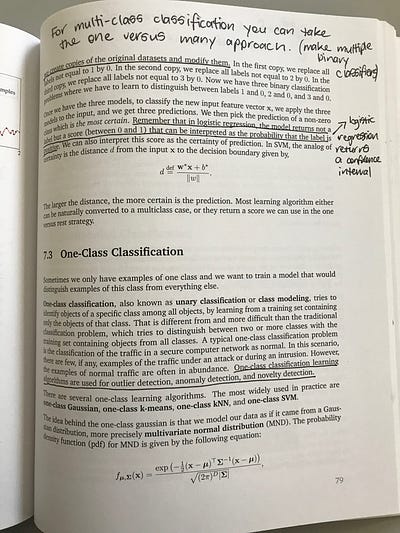

This kind of language is a constant throughout the book. Many of the techno-jargon terms are described in one or two lines without the fluff.

What’s a classification problem?

Classification is a problem of automatically assigning a label to an unlabelled example. Spam detection is a famous example of classification.

What’s a regression problem?

Regression is a problem of predicting a real-valued label (often called target) given an unlabelled example. Estimating house price valuation based on house features, such as area, the number of bedrooms, location and so on is a famous example of regression.

I took these from the book.

Chapter 3 and 4 — What are the best machine learning algorithms? Why?

Chapter 3 and 4 demonstrate some of the most powerful machine learning algorithms and what makes them learning algorithms.

You’ll find working examples of Linear Regression, Logistic Regression, Decision Tree Learning, Support Vector Machines and k-Nearest Neighbours.

There’s plenty of mathematical notation but nothing you’re not equipped to handle after Chapter 2.

Burkov does an amazing job of setting up the theory, explaining a problem and then pitching a solution for each of the algorithms.

With this, you’ll start to see why inventing a new algorithm is a rare practice. It’s because the existing ones are good at what they do. And as a budding machine learning engineer, your role is to figure out how they can be applied to your problem.

Chapter 5 — Basic Practice (Level 1 Machine Learning)

Now you’ve seen examples of the most useful machine learning algorithms, how do you apply them? How do you measure their effectiveness? What should you do if they’re working too well (overfitting)? Or not working well enough (underfitting)?

You’ll see how much of a data scientists or machine learning engineers time is making sure the data is ready to be used with a learning algorithm.

What does this mean?

It means turning data into numbers (computers don’t do well with anything else), dealing with missing data (you can’t learn on nothing), making sure it’s all in the same format, combining different pieces of data or removing them to get more out of what you have (feature engineering) and more.

Then what?

Once your data is ready, you have to choose the right learning algorithm. Different algorithms work better on different problems.

The book covers this.

What’s next?

You assess what your learning algorithm learned. This is the most important thing you’ll have to communicate to others.

It often means boiling weeks of work down into one metric. So you want to make sure you’ve got it right.

99.99% accuracy looks good. But what’s the precision and recall? Or the area under the ROC curve (AUC)? Sometimes these are more important. The back end of Chapter 5 explains why.

Chapter 6 — The machine learning Paradigm taking the world by storm, neural networks and deep learning

You’ve seen the pictures. Images of the brain with deep learning neural networks next to them. Some say they try to mimic the brain others argue there’s no relation.

What matters is how you can use them, what they’re actually made up of not what they’re kind of made of.

A neural network is a combination of linear and non-linear functions. Straight lines and non-straight lines. Using this combination, you can draw (model) anything.

The Hundred-Page Machine Learning Book goes through the most useful examples of neural networks and deep learning such as, Feed-Forward Neural Networks, Convolutional Neural Networks (usually used for images) and Recurrent Neural Networks (usually used for sequences, like words in an article or notes in a song).

Deep learning is what you’ll commonly hear referred to as AI. But after reading this book, you’ll realise as much as it’s AI, it’s also a combination of the different mathematical functions you’ve been learning about in previous chapters.

Chapter 7 & 8 — Using what you’ve learned

Now you’ve got all these tools, how and when should you use them?

If you’ve got articles you need an algorithm to label for you, which one should you use?

If you’ve only got two categories of articles, sport and news, you’ve got a binary problem. If you’ve got more, sport, news, politics, science, you’ve got a multi-class classification problem.

What if an article could have more than one label? One about science and economics. That’s a multi-label problem.

How about translating your articles from English to Spanish? That’s a sequence-to-sequence problem, a sequence of English words to a sequence of Spanish words.

Chapter 7 covers these along with ensemble learning (using more than one model to predict the same thing), regression problems, one-shot learning, semi-supervised learning and more.

Alright.

So you’ve got a bit of an understanding on what algorithm you can use when. What happens next?

Chapter 8 dives into some of the challenges and techniques you’ll come across with experience.

Imbalanced classes is the challenge of having more data for one label and not enough for another. Think of our article problem but this time we have 1,000 sports articles and only 10 science articles. What should you do here?

Are many hands better than one? Combining models trying to predict the same thing can lead to better results. What are the best ways to do this?

And if one of your models already knows something, how can you use this in another one? This practice is called Transfer Learning. You likely do it all the time. Taking what you know in one domain and using it in another. Transfer Learning does the same but with neural networks. If your neural network knows what order words in Wikipedia articles appear, can it be used to help classify your articles?

What if you have multiple inputs to a model, like text and images? Or multiple outputs, like whether or not your target appears in an image (binary classification) and if it does, where (the coordinates)?

The book covers these.

Chapter 9 & 10 — Learning without labels and other forms of learning

Unsupervised learning is when your data doesn’t have labels. It’s a hard problem because you don’t have a ground truth to judge your model against.

The book looks at two ways of dealing with unlabelled data, density estimation and clustering.

Density estimation tries to specify the probability of a sample falling in a range of values as opposed to taking on a single value.

Clustering aims to group samples which are similar together. For example, if you had unlabelled articles you’d expect ones on sports to be clustered closer together than articles on science (once they’ve been converted to numbers).

Even if you did have labels, another problem you’ll face is having too many variables for the model to learn and not enough samples of each. The practice of fixing this is called dimensionality reduction. In other words, reducing the number of things your model has to learn but still maintaining the quality of the data.

To do this, you’ll be looking at using principal component analysis (PCA), uniform manifold approximation and projection (UMAP) or autoencoders.

These sound intimidating but you’ve built the groundwork to understand them in the previous chapters.

The penultimate chapter goes through other forms of learning such as learning to rank. As in, what Google uses to return search results.

Learning to recommend, as in what Medium uses to recommend you articles to read.

And self-supervised learning, in the case of word embeddings which are created by an algorithm reading text and remembering which words appear in the presence of others. It’s self-supervised because the presence of words next to each other are the labels. As in, dog is more likely to appear in a sentence with the word pet than the word car.

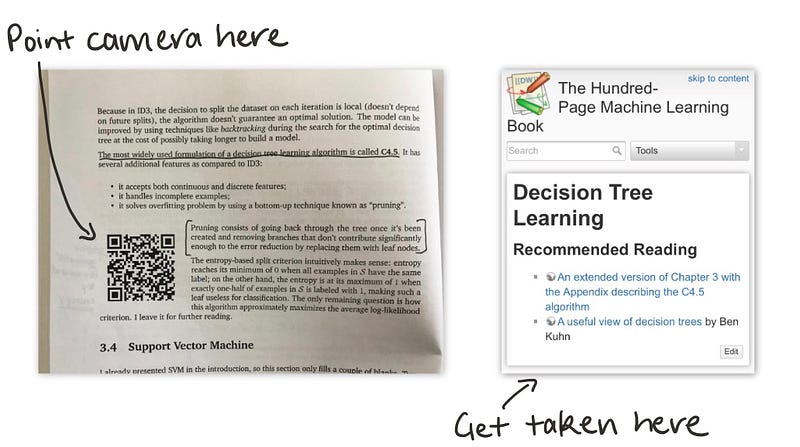

The book that keeps giving: The accompanying wiki

The Hundred-Page Machine Learning Book is covered with QR codes. For those after extra-curriculum, the QR codes link to accompanying documentation for each chapter. The extra material includes code examples, papers and references where you can dive deeper.

The best thing?

Burkov updates the wiki himself with new material. Further compounding the start here and continue here for machine learning label.

What doesn’t it cover?

Everything in machine learning. Those books are 1000+ pages. But the topics above are more than enough to get you started and keep you going.

Chapter 11 — What wasn’t covered

The topics which aren’t covered in-depth in the book are the ones which haven’t yet proven effective in a practical setting (doesn’t mean they can’t be), aren’t as widespread as the techniques above or are still heavily under research.

These include reinforcement learning, topic modelling, generative adversarial networks (GANs) and a few others.

Conclusion

This article started out too long. I went back and edited out the parts which were excess. Burkov inspired me.

If you want to get started in machine learning or if you’re a machine learning practitioner like me and you want to make sure what you’re practising is in line with what works. Get The Hundred-Page Machine Learning Book.

Read it, buy it, reread it.

You can find a video version of this article on YouTube. Otherwise, if you have any other questions, feel free to reach out or sign up for updates on my work.